Survey data is only as valuable as the quality of the responses collected. Unfortunately, inattentive respondents can compromise the integrity of research, leading to misleading conclusions. To combat this issue, researchers and survey administrators must take proactive steps to engage participants, set clear expectations, and implement strategies that promote accurate data collection. Below are key tactics to improve survey engagement, maintain attentiveness, and ensure high-quality responses.

One of the most effective ways to enhance respondent attentiveness is by optimizing the survey itself. Thoughtful design, engaging content, and user-friendly navigation can significantly reduce inattentiveness. By focusing on survey structure and delivery, researchers can create an experience that encourages respondents to provide accurate and meaningful responses.

Here are some key approaches to preventing survey respondent inattentiveness.

Surveys should be as brief as possible while still collecting necessary data. Long and monotonous surveys can lead to respondent fatigue, causing disengagement. Using clear and simple language helps respondents understand questions easily.

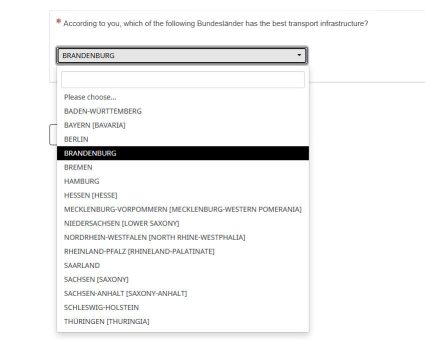

Additionally, incorporating visual elements or interactive questions can maintain engagement and prevent boredom. Instead of using a multiple-choice format for every question, consider using a drop-down option for questions that may have a long list of possible responses. This can help streamline the survey and reduce visual clutter.

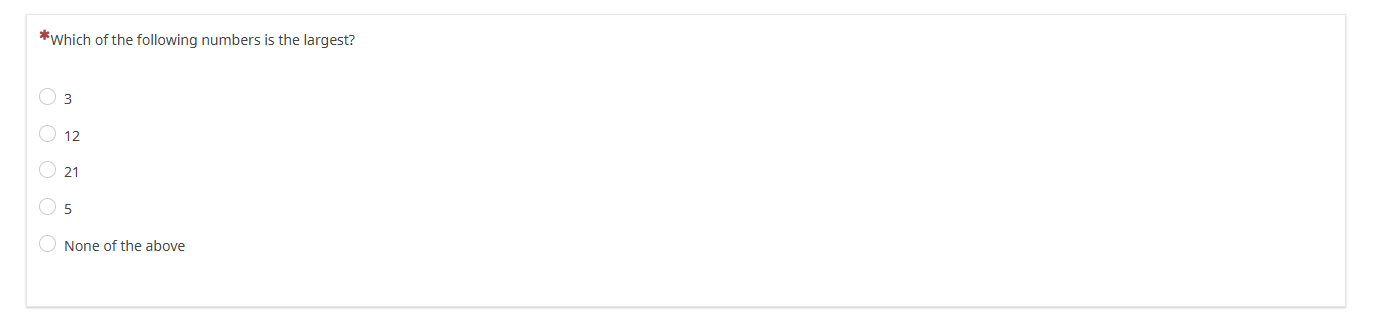

Including attention-check questions helps identify and filter out inattentive respondents. These checks can take the form of explicit instructions (e.g., “Select ‘Strongly Disagree’ for this question to show you are paying attention”), reverse-coded items, or implausible response options that require thoughtful selection.

For instance, a question may ask respondents to choose the largest number from a set of options such as [3, 5, 15, 21, None of the above]. An inattentive respondent may select an incorrect answer randomly, while an attentive one would correctly identify 21 as the largest number.

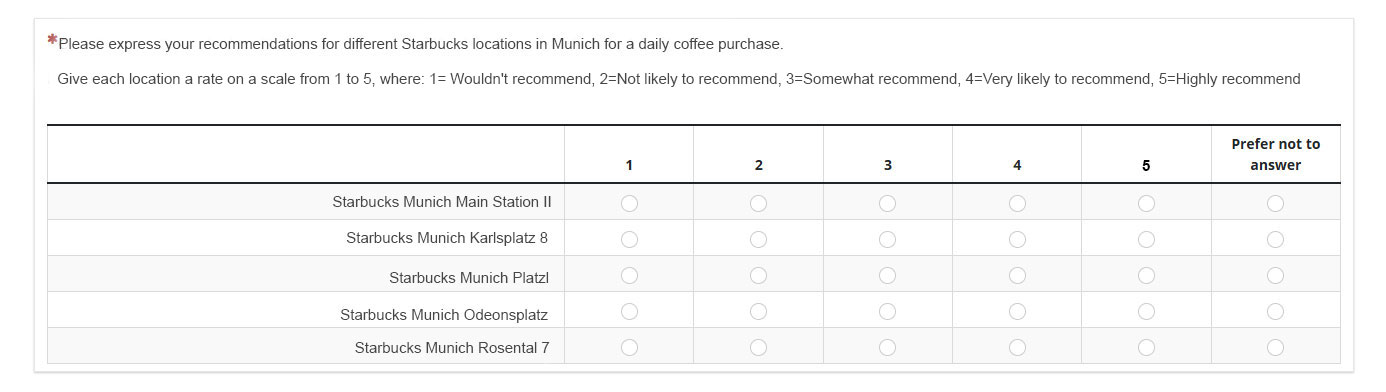
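As a rough illustration, attention checks like the ones above can be scored automatically once responses are collected. The sketch below is only one possible approach: the question ids (`q_instructed`, `q_largest_number`), the answer key, and the `max_failures` threshold are all hypothetical and would need to match your own survey.

```python
from typing import Dict, List

# Hypothetical answer key: question id -> the only response that passes the check.
ATTENTION_CHECKS: Dict[str, str] = {
    "q_instructed": "Strongly Disagree",  # instructed-response item
    "q_largest_number": "21",             # largest of 3, 5, 15, 21
}


def failed_checks(response: Dict[str, str]) -> List[str]:
    """Return the ids of attention checks this respondent missed or skipped."""
    return [
        qid
        for qid, expected in ATTENTION_CHECKS.items()
        if response.get(qid) != expected
    ]


def is_attentive(response: Dict[str, str], max_failures: int = 0) -> bool:
    """Treat a respondent as attentive if they fail at most `max_failures` checks."""
    return len(failed_checks(response)) <= max_failures
```

Whether to drop a respondent after a single failed check or only after several is a judgment call; a stricter threshold removes more noise but can also discard honest mistakes.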

Repetition can cause respondents to lose interest or respond mechanically. Instead, varying question formats and rotating wording while maintaining consistency in measurement can sustain engagement and encourage more thoughtful responses.

One effective method is to use a table layout for questions that cover the same topic across different areas. For example, instead of asking a separate satisfaction question for each store location, a single table can present all locations together so respondents can rate them at a glance.

This approach streamlines responses, reduces redundancy, and keeps respondents engaged.

A well-structured survey with clear formatting and spacing helps prevent respondents from skimming through questions. Grouping related questions logically makes navigation easier, while progress indicators provide a sense of completion, encouraging respondents to stay engaged.

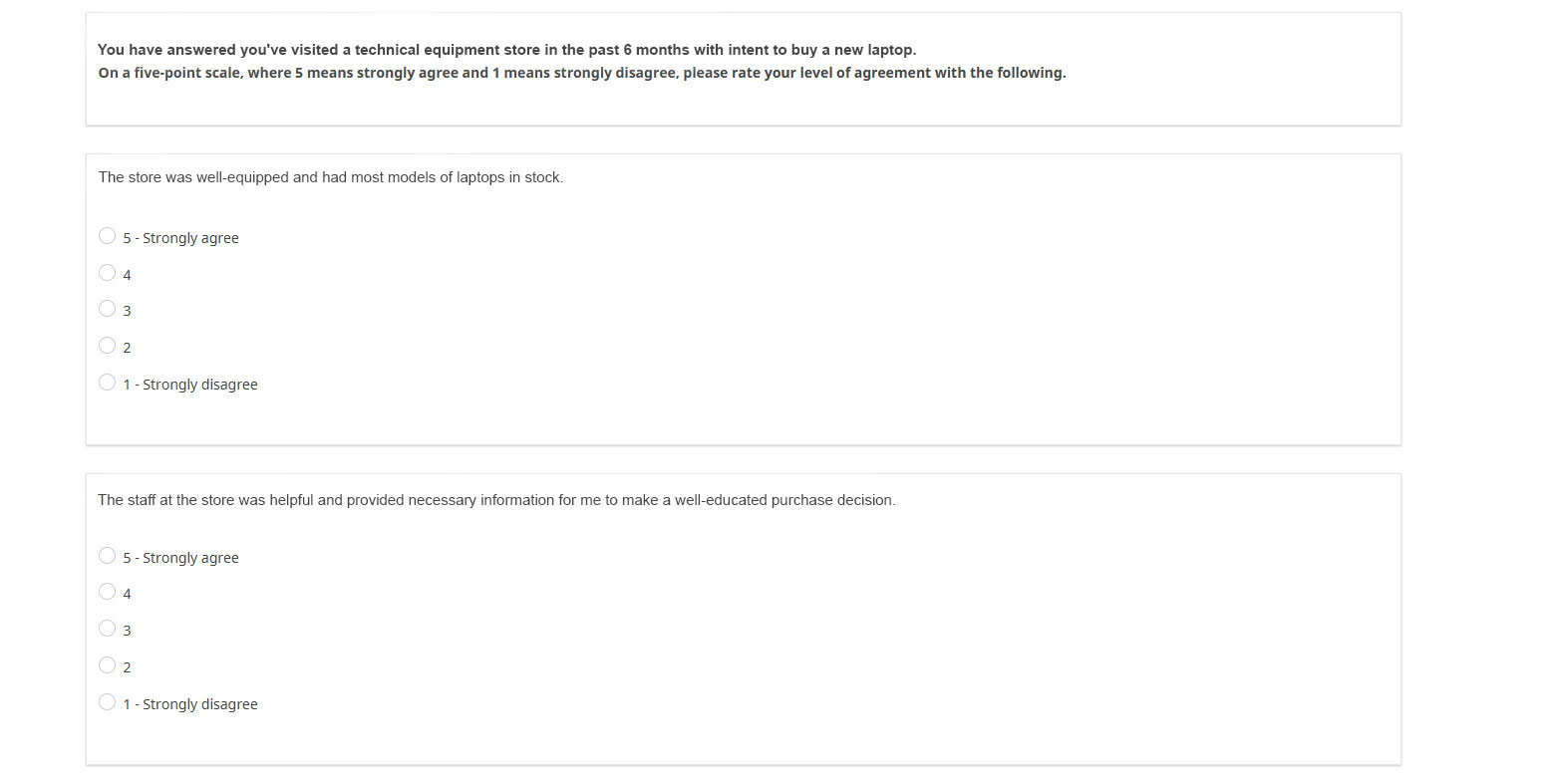

For example, a survey about customer satisfaction could begin with a scenario describing a recent store visit, followed by questions asking respondents to rate aspects of their experience such as staff helpfulness, product availability, and checkout efficiency.

This structured approach keeps respondents engaged and ensures their answers are contextually relevant.

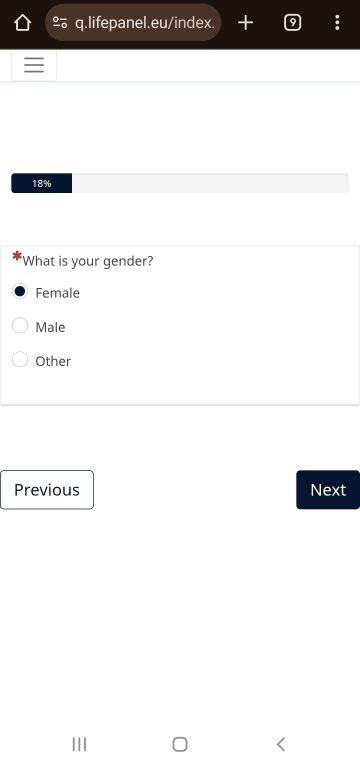

A survey that isn’t optimized for smaller screens can frustrate respondents, leading to incomplete answers or abandonment of the survey entirely. Questions should be clearly legible on mobile screens, and answer options should be easy to select. Navigation should be seamless, so that respondents can move between questions without encountering delays or errors. Large, clickable buttons, simple text, and minimal scrolling improve the overall user experience. Surveys should also load quickly, since lag can reduce respondent engagement.

For example, a survey that automatically adjusts to the user’s screen size, with large text and well-spaced questions, ensures that participants remain engaged, regardless of the device they are using.

Pretesting, or pilot testing, is one of the best ways to identify and address potential issues before launching a survey to a broader audience. Conducting a pretest allows researchers to evaluate various aspects of the survey, such as question clarity, flow, and overall length. By testing the survey with a small group, you can determine whether respondents are likely to become confused or disengaged, and whether certain questions are repetitive or redundant.

Additionally, pretesting can help identify any technical issues, such as poor mobile compatibility or problematic navigation. Feedback from pretest respondents can also highlight areas where the survey can be improved, whether it’s through rewording questions for better clarity or adjusting the layout for ease of use.

Once your survey is live, actively monitoring response patterns can help identify potential issues with respondent attentiveness. Straight-lining, or selecting the same response for every question, is a red flag indicating that respondents may not be fully engaged. Similarly, extremely fast completion times can be a sign that someone is rushing through the survey without thoughtfully considering their answers. Inconsistencies in responses, such as contradictory answers (e.g., rating a product highly in one section and poorly in another), can also suggest inattention or random answering.

By analyzing response patterns, researchers can spot these issues early and take corrective measures, such as filtering out responses from inattentive participants or making adjustments to the survey design for future iterations. This active monitoring helps ensure that the data being collected is as accurate and reliable as possible.
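The checks described above can be sketched in a few lines of code. This is a minimal example under stated assumptions, not a standard method: the response fields (`id`, `seconds`, `ratings`, `rating_early`, `rating_late`) are hypothetical, and the cutoffs (one third of the median completion time, a gap of more than two scale points) are illustrative values you would tune for your own survey.

```python
from statistics import median
from typing import Dict, List


def is_straight_lined(ratings: List[int]) -> bool:
    """True when every item in a rating grid received the same answer."""
    return len(ratings) > 1 and len(set(ratings)) == 1


def is_speeder(seconds: float, median_seconds: float, fraction: float = 0.33) -> bool:
    """True when completion time is below a fraction of the median time."""
    return seconds < fraction * median_seconds


def is_contradictory(first: int, second: int, max_gap: int = 2) -> bool:
    """True when two ratings of the same item differ by more than `max_gap` points."""
    return abs(first - second) > max_gap


def flag_responses(responses: List[dict]) -> Dict[str, List[str]]:
    """Map each respondent id to the list of quality flags raised for it."""
    typical = median([r["seconds"] for r in responses])
    flags: Dict[str, List[str]] = {}
    for r in responses:
        raised = []
        if is_straight_lined(r["ratings"]):
            raised.append("straight_lining")
        if is_speeder(r["seconds"], typical):
            raised.append("speeding")
        # Assumed fields: the same product rated in two different sections.
        if is_contradictory(r["rating_early"], r["rating_late"]):
            raised.append("contradiction")
        flags[r["id"]] = raised
    return flags
```

A flag is a prompt for review rather than proof of inattention: some respondents genuinely hold the same opinion on every item, and some simply read quickly.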

Personalization plays a significant role in maintaining respondent engagement and attentiveness. When respondents feel that a survey is directly relevant to them, they are more likely to provide thoughtful and accurate responses. Personalized surveys can be achieved using branching logic, where the questions shown to the respondent depend on their previous answers. This creates a more dynamic and tailored experience that encourages participants to engage more deeply.

For example, if a respondent indicates they have used a product in the past month, the survey can follow up with questions specifically related to their recent experience. Additionally, referencing previous answers can make the survey feel more relevant and personalized, showing respondents that their input matters. This approach increases engagement, reduces the likelihood of respondents abandoning the survey, and encourages them to provide more thoughtful and specific answers.
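To make the idea of branching logic concrete, here is a small sketch of how a survey might choose the next question based on the previous answer. The question ids, prompts, and routing tables are invented for illustration; most survey platforms provide their own way to configure this.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical question bank: question id -> prompt text.
QUESTIONS: Dict[str, str] = {
    "used_recently": "Have you used the product in the past month?",
    "recent_experience": "How would you rate your experience over the past month?",
    "barriers": "What has kept you from using the product recently?",
    "recommend": "How likely are you to recommend the product?",
}

# Branching rules: (question id, normalized answer) -> next question id.
BRANCHES: Dict[Tuple[str, str], str] = {
    ("used_recently", "yes"): "recent_experience",
    ("used_recently", "no"): "barriers",
}

# Fallback order used when no branching rule matches; None ends the survey.
DEFAULT_NEXT: Dict[str, Optional[str]] = {
    "recent_experience": "recommend",
    "barriers": "recommend",
    "recommend": None,
}


def next_question(current: str, answer: str) -> Optional[str]:
    """Pick the next question id from the current question and its answer."""
    key = (current, answer.strip().lower())
    if key in BRANCHES:
        return BRANCHES[key]
    return DEFAULT_NEXT.get(current)


def question_path(answers: Dict[str, str], start: str = "used_recently") -> List[str]:
    """Replay recorded answers and return the question ids shown, in order."""
    path: List[str] = []
    current: Optional[str] = start
    while current is not None:
        path.append(current)
        if current not in answers:
            break  # respondent has not answered this question yet
        current = next_question(current, answers[current])
    return path
```

For example, a respondent who answers "Yes" to the first question is routed to `recent_experience`, while one who answers "No" sees `barriers` instead, so neither is asked a question that does not apply to them.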

Ensuring respondent attentiveness is essential for gathering high-quality, reliable data. By optimizing the survey design through concise and engaging content, diverse question formats, and user-friendly navigation, researchers can effectively minimize inattentiveness. Strategies such as incorporating attention checks, avoiding redundancy, and personalizing the experience encourage more thoughtful engagement from participants.

Moreover, monitoring response patterns and implementing post-survey quality control measures further enhance data integrity. When respondents are encouraged to stay engaged and provide accurate answers, the insights derived from the survey become more actionable, leading to better-informed decisions and more valuable outcomes. Ultimately, a well-crafted survey that prioritizes respondent experience not only boosts participation but also ensures the accuracy and reliability of the data collected.